The Ethical Side of Data in Personalized Travel

The Promise and Peril of Personalized Travel Experiences

Harnessing Data for Tailored Adventures

Personalized travel experiences are increasingly driven by the vast amounts of data we generate online. Websites and apps meticulously track our preferences, past trips, and even our social media activity to curate bespoke itineraries. This data-driven approach allows for highly tailored recommendations, from specific restaurants to off-the-beaten-path activities. The potential for a unique, immersive experience that caters precisely to individual needs and desires is undeniable. However, this convenience raises significant ethical questions that must be carefully considered.

The ability to anticipate and fulfill our travel desires is a powerful tool, but the ethical implications of collecting, storing, and using this data cannot be ignored. Transparency and consent are crucial: travelers need to know how their data is being used and who has access to it. Only by confronting these questions can the industry ensure that personalized travel experiences remain both enriching and responsible.

Ethical Considerations in Data Collection and Usage

The ethical use of data in the travel industry necessitates a thoughtful approach. Data privacy is paramount, and individuals should have control over their personal information. Travel companies must be transparent about their data collection practices, clearly outlining what information they collect, how it's used, and with whom it's shared. Furthermore, data security measures are essential to protect sensitive information from unauthorized access or breaches. A commitment to responsible data handling is vital to building trust and ensuring the ethical integrity of personalized travel experiences.

Beyond privacy, algorithmic bias needs careful consideration. Algorithms trained on biased data can perpetuate harmful stereotypes and inequalities. Travel companies must work diligently to detect and reduce bias in their algorithms and to ensure that recommendations reflect the diversity of destinations and experiences. The potential for recommendations to discriminate on the basis of race, gender, or socioeconomic status is a serious concern that requires proactive mitigation strategies.

The Impact on Local Communities and Environments

Personalized travel, while offering unique experiences, can also have unintended consequences on local communities and the environment. The pursuit of tailored adventures can lead to an over-concentration of tourists in specific areas, potentially straining resources and disrupting local cultures. It's imperative that companies promoting personalized experiences also prioritize sustainable tourism practices and ensure that their recommendations benefit local economies and preserve the environment. This includes supporting locally owned businesses, respecting cultural norms, and minimizing the environmental footprint of travel.

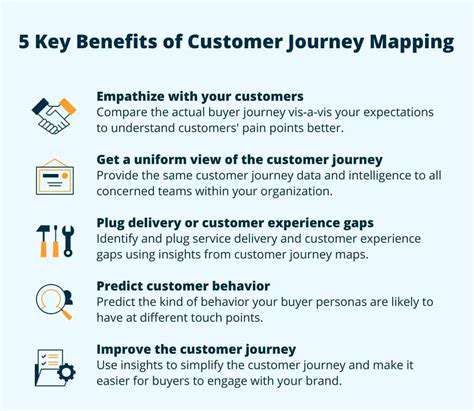

Transparency and Control in the Customer Journey

For personalized travel experiences to be truly ethical, travelers must have control over their data and understand how it's being used. Clear and concise explanations of data collection policies are essential. Users should have readily available options for opting out of data collection or modifying their preferences. This transparency fosters trust and empowers travelers to make informed decisions about their data. Ultimately, a customer-centric approach that prioritizes transparency and control is vital for the ethical development of personalized travel experiences.
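To make the idea of opt-out controls concrete, here is a minimal Python sketch of consent-gated data collection. It assumes a hypothetical `TravelerProfile` with consent recorded per purpose (the purpose names in `PURPOSES` are illustrative, not a standard): events are stored only for purposes the traveler has granted, and opting out both withdraws consent and deletes the data collected for that purpose.

```python
from dataclasses import dataclass, field

# Hypothetical data-use purposes; a real service would define its own list.
PURPOSES = ("personalization", "analytics", "third_party_sharing")


@dataclass
class TravelerProfile:
    user_id: str
    # Opt-in by default: no purpose is allowed until the traveler grants it.
    consents: set[str] = field(default_factory=set)
    # Each stored event remembers the purpose it was collected for.
    events: list[tuple[str, str]] = field(default_factory=list)

    def grant(self, purpose: str) -> None:
        """Record the traveler's consent for one purpose."""
        if purpose not in PURPOSES:
            raise ValueError(f"unknown purpose: {purpose}")
        self.consents.add(purpose)

    def opt_out(self, purpose: str) -> None:
        """Withdraw consent and delete data collected for that purpose."""
        self.consents.discard(purpose)
        self.events = [(p, e) for p, e in self.events if p != purpose]

    def record(self, event: str, purpose: str) -> bool:
        """Store the event only if the traveler has consented to this purpose."""
        if purpose not in self.consents:
            return False  # no consent: the event is never stored
        self.events.append((purpose, event))
        return True


profile = TravelerProfile("traveler-42")
print(profile.record("viewed: Lisbon food tour", "personalization"))  # False
profile.grant("personalization")
print(profile.record("viewed: Lisbon food tour", "personalization"))  # True
profile.opt_out("personalization")
print(profile.events)  # []
```

Defaulting every purpose to off is a design choice in this sketch; a production system would also need audit logs, retention limits, and a way to handle data already shared with third parties.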

Algorithmic Bias and Fairness in Personalized Recommendations

Understanding Algorithmic Bias

Algorithmic bias in personalized recommendations arises when the algorithms used to suggest items or content to users systematically favor certain groups or individuals over others. This bias can stem from various sources, including the data used to train the algorithms, the design of the algorithms themselves, and the implicit biases present in the human creators of the algorithms. Recognizing and mitigating these biases is crucial to ensure that the recommendation systems are fair and equitable for all users, promoting inclusivity and avoiding the perpetuation of existing societal inequalities.

The impact of algorithmic bias can be significant, leading to unequal access to opportunities, reinforcing stereotypes, and potentially creating a feedback loop that exacerbates existing disparities. Understanding the root causes of this bias is essential for developing effective solutions.

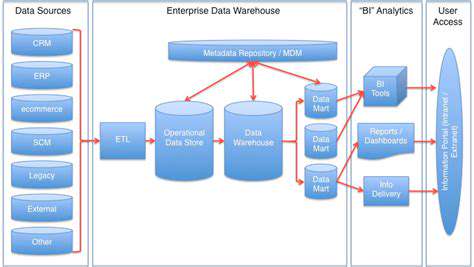

Data Bias and its Impact

The datasets used to train recommendation algorithms often reflect existing societal biases. For example, if a dataset predominantly features products or content consumed by a specific demographic, the algorithm might learn to recommend those items disproportionately, potentially crowding out items that other groups value. This bias in the data skews recommendations: one user group receives well-tailored experiences, while other groups receive suggestions that are less relevant to them, or even harmful.

Consequently, the algorithm might overlook the preferences and needs of users from underrepresented groups, resulting in a less diverse and inclusive recommendation experience. Identifying and addressing these data biases is a critical first step in building fairer recommendation systems.
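The effect can be seen in a toy example. The Python sketch below uses a hypothetical booking log in which group A accounts for most of the data, and a deliberately naive popularity model that ranks activities by total bookings. Because the model ignores who made each booking, it returns the same group-A favorites to every traveler, and group B's preferred activities never surface, not even for group B.

```python
from collections import Counter

# Hypothetical booking log of (traveler_group, activity) pairs; group "A" dominates.
bookings = (
    [("A", "nightclub tour")] * 80
    + [("A", "wine tasting")] * 60
    + [("B", "accessible museum visit")] * 8
    + [("B", "wheelchair-friendly beach")] * 5
)


def train_popularity(log):
    """Naive model: count how often each activity was booked, ignoring who booked it."""
    return Counter(activity for _, activity in log)


def recommend(model, k=2):
    """Return the k most-booked activities; every traveler gets the same list."""
    return [activity for activity, _ in model.most_common(k)]


model = train_popularity(bookings)
print(recommend(model))  # ['nightclub tour', 'wine tasting']
```

Real recommenders are far more sophisticated, but the same pressure applies whenever the training signal is dominated by the majority group's behavior.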

Algorithm Design and Fairness

The design of the recommendation algorithms themselves can also contribute to algorithmic bias. Some algorithms are more prone to bias than others, especially those that prioritize popularity or historical trends without considering the diversity of user preferences. The weighting of features can introduce bias too: if a heavily weighted feature correlates with group membership, such as a home postcode standing in for socioeconomic status, recommendations will skew toward or against that group.

Developing algorithms that explicitly consider fairness criteria, such as diversity and representativeness, is essential to mitigate potential biases. Techniques like fairness-aware learning and counterfactual fairness analysis can be incorporated into the design process to improve the equitable distribution of recommendations.
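Fairness-aware learning and counterfactual fairness analysis are substantial topics in their own right. As a simpler, swapped-in illustration of the same goal, the Python sketch below applies a post-processing re-ranking step instead: it takes relevance scores from an existing recommender and greedily fills the top-k list in score order, while reserving a minimum share of slots for items flagged as under-exposed (for example, less-visited or locally owned options). The `Item` type, the `underexposed` flag, and the `min_share` parameter are assumptions made for this sketch.

```python
import math
from dataclasses import dataclass


@dataclass
class Item:
    name: str
    score: float        # relevance score from an existing recommender
    underexposed: bool  # hypothetical flag, e.g. a less-visited or locally owned option


def rerank_with_min_share(items: list[Item], k: int, min_share: float) -> list[Item]:
    """Greedy top-k re-ranking in score order that reserves at least
    ceil(min_share * k) slots for under-exposed items, when enough exist."""
    ranked = sorted(items, key=lambda it: it.score, reverse=True)
    quota = min(math.ceil(min_share * k), sum(it.underexposed for it in ranked))
    result: list[Item] = []
    for it in ranked:
        if len(result) == k:
            break
        slots_left = k - len(result)
        still_needed = quota - sum(r.underexposed for r in result)
        # Skip a regular item if the remaining slots are all needed for the quota.
        if not it.underexposed and still_needed >= slots_left:
            continue
        result.append(it)
    return result


candidates = [
    Item("Famous tower", 0.95, False),
    Item("Chain hotel brunch", 0.90, False),
    Item("Big-name theme park", 0.88, False),
    Item("Family-run taverna", 0.70, True),
    Item("Neighborhood walking tour", 0.65, True),
]
print([it.name for it in rerank_with_min_share(candidates, k=3, min_share=0.5)])
# ['Famous tower', 'Family-run taverna', 'Neighborhood walking tour']
```

Re-ranking leaves the underlying model untouched, which makes it easy to bolt onto an existing system, but it only corrects the output; bias in the training data or features still has to be addressed upstream.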

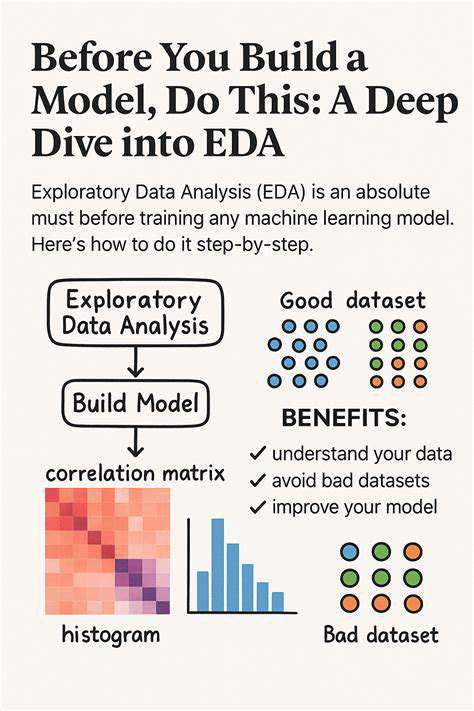

Evaluating and Monitoring Bias

It's not enough to simply build algorithms that are intended to be fair; they need to be rigorously evaluated and continuously monitored for bias. This process involves analyzing the recommendations generated by the system for patterns of bias across different user groups. Statistical methods and user feedback mechanisms can be employed to detect instances where the system is unfairly favoring or excluding certain groups.
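One simple statistical check is to compare how often each user group is shown a given kind of recommendation. The Python sketch below assumes a hypothetical log of `(user_group, item_category)` pairs, computes each group's exposure rate for one category, and flags the system when the lowest rate falls below 80% of the highest. That threshold is borrowed from the "four-fifths rule" used in US employment-discrimination guidance and serves here only as a rough heuristic, not a formal statistical test.

```python
from collections import defaultdict


def exposure_rates(logs, category):
    """Share of each group's recommendations that fall in `category`.

    `logs` is an iterable of (user_group, item_category) pairs (hypothetical schema).
    """
    shown = defaultdict(int)
    in_category = defaultdict(int)
    for group, item_category in logs:
        shown[group] += 1
        if item_category == category:
            in_category[group] += 1
    return {group: in_category[group] / shown[group] for group in shown}


def disparity_ratio(rates):
    """Lowest group rate divided by the highest; 1.0 means equal exposure."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


def flag_disparity(rates, threshold=0.8):
    """Flag when any group's rate is below `threshold` times the highest rate."""
    return disparity_ratio(rates) < threshold


# Hypothetical audit log: how often each traveler group was shown discounted deals.
logs = (
    [("A", "discount")] * 30 + [("A", "standard")] * 70
    + [("B", "discount")] * 12 + [("B", "standard")] * 88
)
rates = exposure_rates(logs, "discount")
print(rates)                             # {'A': 0.3, 'B': 0.12}
print(round(disparity_ratio(rates), 2))  # 0.4
print(flag_disparity(rates))             # True
```

With small samples, a gap like this should be confirmed with a proper significance test before drawing conclusions, and deciding which groups and categories to audit is itself a judgment call.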

Addressing Bias in Personalized Recommendations

Mitigating algorithmic bias in personalized recommendations requires a multi-faceted approach that tackles both the data and the algorithms. This includes diversifying training datasets, employing fairness-aware algorithms, and implementing rigorous evaluation and monitoring procedures. Furthermore, incorporating user feedback and perspectives from diverse groups can help to identify and address biases that might not be apparent through purely technical analysis.

Transparency and accountability are also key components of this process. Users should have a clear understanding of how the recommendation system works and how biases might be affecting their experience. This transparency can foster trust and encourage users to actively participate in identifying and addressing potential issues.

Ethical Implications and Responsible AI

The ethical implications of algorithmic bias in personalized recommendations extend beyond simply improving fairness. They touch upon questions of social justice, equity, and the potential for perpetuating existing inequalities. Recognizing the potential for harm and actively working to mitigate bias is crucial for building trustworthy and responsible AI systems that benefit all users.

Ultimately, addressing algorithmic bias in personalized recommendations requires a commitment to ethical considerations alongside technical expertise. This includes incorporating diverse perspectives, fostering transparency, and actively working to create a more equitable and inclusive digital ecosystem.

The Role of Transparency and Consent in Data Collection

Transparency in Decision-Making

Transparency in decision-making processes is crucial for fostering trust and accountability. It involves openly sharing information about the rationale behind decisions, the data used for analysis, and the potential impact on stakeholders. This open communication allows for greater scrutiny and feedback, leading to more informed and ultimately better decisions. Openness about the process, even when difficult, enhances the legitimacy and acceptance of those decisions.

Furthermore, transparency encourages active participation and engagement from individuals and groups who may be affected by the decisions. When stakeholders understand the reasoning behind a choice, they are better positioned to offer constructive criticism and alternative perspectives, ultimately enriching the decision-making process.

Consequences of Lack of Transparency

A lack of transparency in decision-making can have severe consequences. It breeds distrust and suspicion among stakeholders, potentially leading to conflict and disengagement. When individuals feel excluded from the decision-making process, they may become disillusioned and less likely to support the outcomes, even if the decision was ultimately beneficial.

Furthermore, a lack of transparency can hinder the identification and resolution of potential problems. Hidden agendas or undisclosed information can lead to unintended negative consequences that are difficult to rectify. This can result in a loss of efficiency and effectiveness in the long term.

Benefits of Effective Transparency

Effective transparency in decision-making processes can lead to a range of positive outcomes. It promotes trust and collaboration among stakeholders, fostering a more positive and productive working environment. This collaborative approach is crucial for achieving shared goals and objectives.

The benefits extend beyond just the immediate decision being made. Transparency fosters a culture of accountability, encouraging individuals to act responsibly and ethically. This, in turn, builds stronger relationships and a more robust organizational structure.

The Importance of Consistent Transparency

Consistency in transparency is critical for maintaining trust and credibility over time. Regular, predictable disclosure of information, whatever the situation, reinforces the commitment to open communication and builds a foundation of trust among stakeholders. This ongoing openness demonstrates a genuine commitment to ethical conduct and responsible governance.

Beyond building trust, consistent transparency promotes accountability and curbs the spread of misinformation and rumors. This approach can reduce conflicts and foster a more collaborative and productive environment.
