The Ethical Considerations of Algorithmic Bias in Automated Travel

Data Disparities and Algorithmic Discrimination

Data Collection Biases

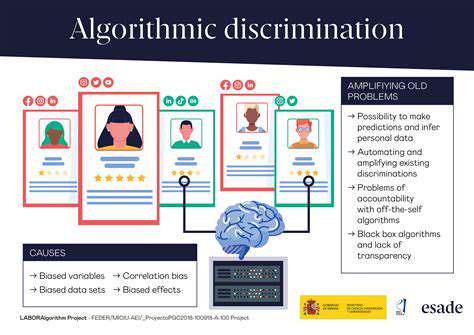

Data disparities in algorithmic decision-making often stem from biased data collection practices. These biases can be unintentional, reflecting existing societal inequalities, or they can be deliberate, with malicious intent. For instance, if a dataset used to train an algorithm for loan applications predominantly includes data from individuals with a certain socioeconomic background, the algorithm might inadvertently favor those with similar backgrounds, potentially disadvantaging others. This can perpetuate existing inequalities and lead to unfair outcomes for marginalized groups.

Furthermore, the methodology employed during data collection can introduce biases. Data collection methods that disproportionately target specific demographics or use limited, non-representative samples can produce skewed datasets that fail to reflect the broader population. This can lead to algorithms that are more effective in predicting outcomes for some groups while performing poorly for others.
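A simple first check for the sampling problem described above is to compare each group's share of the collected data with its share of the population the system is meant to serve. The sketch below assumes that a group label is available for each record and that reference population shares are known. The function name `representation_gaps` and all figures are hypothetical.

```python
from collections import Counter


def representation_gaps(sample_groups, population_shares):
    """Return, for each group, its sample share minus its population share.

    Positive values mean the group is over-represented in the sample;
    negative values mean it is under-represented.
    """
    counts = Counter(sample_groups)
    total = len(sample_groups)
    return {
        group: counts.get(group, 0) / total - share
        for group, share in population_shares.items()
    }


# Hypothetical data: 10 surveyed travelers vs. assumed population shares.
sample = ["urban"] * 8 + ["rural"] * 2
population = {"urban": 0.5, "rural": 0.5}
gaps = representation_gaps(sample, population)
print({g: round(v, 2) for g, v in gaps.items()})  # {'urban': 0.3, 'rural': -0.3}
```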

Algorithmic Design Flaws

Even with unbiased data, algorithmic design can still introduce disparities. Some modeling choices, particularly in complex machine learning models, can amplify existing biases or create new ones. For instance, a model trained to maximize overall accuracy can score well on a large group while making far more errors on a smaller one, because errors on the smaller group barely move the aggregate metric.
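One way a design choice can hide disparities is evaluating a model on a single aggregate metric. The sketch below uses hypothetical predictions for two groups of different sizes to show how a high overall accuracy can mask much lower accuracy for the smaller group. Reporting metrics separately for each group is one common mitigation.

```python
def accuracy(pairs):
    """Fraction of (prediction, label) pairs where the prediction matches the label."""
    return sum(pred == label for pred, label in pairs) / len(pairs)


# Hypothetical predictions: 90 records from group A, 10 from group B.
group_a = [(1, 1)] * 85 + [(0, 1)] * 5  # 85 of 90 correct
group_b = [(1, 1)] * 4 + [(0, 1)] * 6   # 4 of 10 correct

overall = accuracy(group_a + group_b)
per_group = {"A": accuracy(group_a), "B": accuracy(group_b)}
print(round(overall, 2))                                # 0.89
print({g: round(a, 2) for g, a in per_group.items()})  # {'A': 0.94, 'B': 0.4}
```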

Algorithmic design choices made without regard to fairness and equity can perpetuate existing societal inequalities. These choices include which features are selected, how different factors are weighted, and which optimization techniques are used. For example, a feature such as postal code can act as a proxy for income or ethnicity even when those attributes are deliberately excluded. Without careful review of such choices, an algorithm can end up reinforcing discriminatory patterns.

Moreover, the complexity of many algorithms makes it hard to understand how they arrive at their conclusions. This "black box" effect makes biases hidden in a model's inner workings difficult to identify and correct. Explainable AI (XAI) techniques can help address this challenge.

Impact on Different Communities

The consequences of data disparities and algorithmic bias are often felt most acutely by marginalized communities. These communities may be disproportionately affected by unfair lending practices, biased criminal justice outcomes, or discriminatory hiring practices, all fueled by algorithms that have inadvertently or intentionally incorporated biases. These outcomes can have long-lasting and devastating effects on individuals and families.

The cumulative effect of these biases can create systemic disadvantages and perpetuate cycles of inequality. This highlights the crucial need for rigorous evaluation, transparency, and continuous monitoring of algorithms to ensure fairness and equity for all users. Failure to address these issues can have profound implications for social justice and economic opportunity.
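Continuous monitoring can start with something as simple as comparing how often each group receives a favorable outcome. The sketch below computes a disparate impact ratio (the lowest group's selection rate divided by the highest) on hypothetical audit data. It then flags the ratio against 0.8, the "four-fifths" rule of thumb from U.S. employment-selection guidelines. That threshold is a screening heuristic, not a definitive test of fairness. The group names and outcomes are illustrative assumptions.

```python
def selection_rates(outcomes):
    """Map each group to the fraction of its members who got a favorable outcome."""
    return {group: sum(results) / len(results) for group, results in outcomes.items()}


def disparate_impact_ratio(outcomes):
    """Lowest group selection rate divided by the highest group selection rate."""
    rates = selection_rates(outcomes)
    return min(rates.values()) / max(rates.values())


# Hypothetical audit log: 1 = offered a discounted fare, 0 = not offered.
outcomes = {
    "group_x": [1, 1, 1, 0, 1, 1, 0, 1, 1, 1],  # 8 of 10
    "group_y": [1, 0, 0, 1, 0, 1, 0, 0, 1, 0],  # 4 of 10
}
ratio = disparate_impact_ratio(outcomes)
print(round(ratio, 2))  # 0.5
if ratio < 0.8:
    print("Flag for review: ratio is below the four-fifths threshold")
```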

Impact on Diverse Travelers and Accessibility

Impact on Representation and Cultural Sensitivity

Algorithmic systems, particularly those used in travel planning and booking, can inadvertently perpetuate existing biases and stereotypes. For example, if an algorithm prioritizes destinations or accommodations based on historical popularity or user reviews from predominantly one demographic, it can limit exposure to diverse experiences and potentially exclude culturally rich destinations or accommodations catering to specific needs or traditions. This could result in a homogenized travel experience, where unique cultural offerings and perspectives are overlooked, ultimately diminishing the value of travel for diverse travelers seeking authentic connections and experiences.
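One simple way to counter the popularity bias described above is to re-rank results so that the top slots cover several categories rather than only the most popular one. The sketch below interleaves categories round-robin while keeping popularity order within each category. It is one illustrative heuristic, not the only approach, and the destinations, categories, and popularity scores are hypothetical.

```python
from collections import defaultdict
from itertools import zip_longest


def popularity_rank(items):
    """Sort items by popularity, highest first."""
    return sorted(items, key=lambda item: item["popularity"], reverse=True)


def diversify(items, key="category"):
    """Interleave categories round-robin, keeping popularity order within each."""
    buckets = defaultdict(list)
    for item in popularity_rank(items):
        buckets[item[key]].append(item)
    ranked = []
    # Take the best remaining item from each category in turn.
    for row in zip_longest(*buckets.values()):
        ranked.extend(item for item in row if item is not None)
    return ranked


destinations = [
    {"name": "Beach resort A", "category": "resort", "popularity": 980},
    {"name": "Beach resort B", "category": "resort", "popularity": 950},
    {"name": "Beach resort C", "category": "resort", "popularity": 900},
    {"name": "Heritage village", "category": "cultural", "popularity": 120},
    {"name": "Community homestay", "category": "homestay", "popularity": 80},
]
top3_popular = [d["name"] for d in popularity_rank(destinations)[:3]]
top3_diverse = [d["name"] for d in diversify(destinations)[:3]]
print(top3_popular)  # ['Beach resort A', 'Beach resort B', 'Beach resort C']
print(top3_diverse)  # ['Beach resort A', 'Heritage village', 'Community homestay']
```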

Furthermore, algorithms trained on limited datasets might not accurately reflect the needs and preferences of travelers from various cultural backgrounds. This can produce recommendations that are neither tailored nor inclusive, causing frustration and a less enjoyable, less equitable travel experience for diverse travelers.

Bias in Accommodation and Destination Recommendations

Algorithms used for recommending accommodations and destinations often rely on user reviews and other data points that can reflect existing biases. For instance, if a destination or accommodation has received predominantly negative reviews from one demographic, the algorithm might downplay or exclude it from recommendations, even though it may be well suited to other travelers. This can further marginalize certain cultural or ethnic groups, limiting their travel options and perpetuating inequalities within the tourism industry.

Such biases can manifest as unfairly low rankings or the outright exclusion of certain types of accommodation, especially those catering to specific cultural or religious practices or to particular needs such as accessibility or dietary restrictions.
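The sketch below illustrates how a single overall average can bury a listing that one group of reviewers rates highly. This happens when the average is dominated by a larger group whose needs the listing was never designed to serve. The reviewer segments and ratings are hypothetical. Reporting per-segment breakdowns alongside the overall score is one possible mitigation.

```python
from collections import defaultdict
from statistics import fmean


def rating_summary(reviews):
    """Return the overall mean rating and the mean rating per reviewer segment."""
    by_segment = defaultdict(list)
    for segment, rating in reviews:
        by_segment[segment].append(rating)
    overall = fmean(rating for _, rating in reviews)
    return overall, {seg: fmean(ratings) for seg, ratings in by_segment.items()}


# Hypothetical reviews of a guesthouse catering to a specific dietary practice.
reviews = [("segment_1", 2)] * 8 + [("segment_2", 5)] * 2
overall, per_segment = rating_summary(reviews)
print(overall)      # 2.6
print(per_segment)  # {'segment_1': 2.0, 'segment_2': 5.0}
```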

Accessibility and Inclusivity for Travelers with Disabilities

Algorithms used in travel planning and booking platforms often struggle to accommodate the unique needs of travelers with disabilities. They might not effectively integrate information about accessibility features of accommodations, transportation options, or local amenities. This can result in travelers with disabilities receiving inappropriate or misleading recommendations, leading to frustration and a lack of inclusivity in the travel planning process.

Furthermore, algorithms might not account for specific needs such as wheelchair access, support for visual or hearing impairments, or other mobility limitations. This can leave travelers with disabilities with few suitable options, potentially hindering their ability to enjoy a fulfilling and safe travel experience.
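One way to avoid misleading recommendations is to treat accessibility data as three-valued: confirmed present, confirmed absent, or unknown. The sketch below uses hypothetical feature names and listings. It excludes listings that explicitly lack a required feature, and it returns listings with missing data separately for verification instead of presenting them as accessible.

```python
from enum import Enum


class Access(Enum):
    YES = "yes"
    NO = "no"
    UNKNOWN = "unknown"


def filter_accessible(listings, required):
    """Split listings into confirmed matches and ones that need verification.

    A listing is dropped if any required feature is explicitly NO.
    Missing data counts as UNKNOWN rather than being treated as YES.
    """
    confirmed, unverified = [], []
    for listing in listings:
        statuses = [listing["features"].get(f, Access.UNKNOWN) for f in required]
        if Access.NO in statuses:
            continue
        if all(status is Access.YES for status in statuses):
            confirmed.append(listing["name"])
        else:
            unverified.append(listing["name"])
    return confirmed, unverified


listings = [
    {"name": "Hotel A", "features": {"step_free_entrance": Access.YES, "roll_in_shower": Access.YES}},
    {"name": "Hotel B", "features": {"step_free_entrance": Access.YES}},
    {"name": "Hotel C", "features": {"step_free_entrance": Access.NO, "roll_in_shower": Access.YES}},
]
confirmed, unverified = filter_accessible(listings, ["step_free_entrance", "roll_in_shower"])
print(confirmed)   # ['Hotel A']
print(unverified)  # ['Hotel B']
```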

Algorithmic Reinforcement of Existing Inequalities

The use of algorithms in travel planning can inadvertently reinforce existing social and economic inequalities. For example, if algorithms prioritize destinations or accommodations based on price, it can limit access for travelers with lower incomes, further widening the gap between the affluent and the less affluent.
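To make the price effect concrete, the sketch below assumes a ranking that places higher-priced options first, for example to favor booking revenue. The source does not specify the mechanism, so this is an assumption. The sketch measures what share of the top results fall within a traveler's budget. The options, prices, and budget are hypothetical.

```python
def share_within_budget(ranked, budget, k):
    """Fraction of the top-k ranked options priced at or below the budget."""
    top = ranked[:k]
    return sum(option["price"] <= budget for option in top) / len(top)


options = [
    {"name": "Hostel", "price": 40, "rating": 4.6},
    {"name": "Guesthouse", "price": 70, "rating": 4.4},
    {"name": "Boutique hotel", "price": 180, "rating": 4.3},
    {"name": "Luxury resort", "price": 420, "rating": 4.5},
    {"name": "Business hotel", "price": 250, "rating": 4.0},
]
by_price = sorted(options, key=lambda o: o["price"], reverse=True)   # assumed revenue-first ranking
by_rating = sorted(options, key=lambda o: o["rating"], reverse=True)
print(round(share_within_budget(by_price, budget=100, k=3), 2))   # 0.0
print(round(share_within_budget(by_rating, budget=100, k=3), 2))  # 0.67
```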

Additionally, algorithms can perpetuate existing stereotypes and biases, potentially limiting the opportunities for travelers from underrepresented communities to explore and experience a wide array of cultures and destinations. This can contribute to a lack of diversity and inclusion in the tourism industry, harming the overall experience for all.

Transparency and Control Over Algorithmic Decisions

A lack of transparency in how algorithms make decisions about travel recommendations can be problematic. Travelers may not understand the factors that influence the results, making it difficult to challenge or adjust recommendations that may not align with their needs or preferences. This lack of understanding can also lead to a sense of powerlessness over the travel experience.

Users have a right to understand the criteria used to generate recommendations. If the algorithm is biased, this lack of transparency makes it difficult to identify and address the issue, ultimately perpetuating the bias and impacting the overall experience for diverse travelers.
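One way to give users insight into these criteria is to use, or approximate, a scoring function whose per-factor contributions can be shown alongside each recommendation. The sketch below assumes a simple linear score with hypothetical weights and feature names. Production recommenders are usually more complex; for those, post-hoc explanation methods such as SHAP or LIME are commonly used to estimate similar breakdowns.

```python
# Hypothetical weights for an illustrative linear scoring model.
WEIGHTS = {"rating": 0.5, "distance_km": -0.2, "price_per_night": -0.01}


def score_with_explanation(features, weights=WEIGHTS):
    """Return the total score and each factor's contribution to it."""
    contributions = {name: weights[name] * features[name] for name in weights}
    return sum(contributions.values()), contributions


total, parts = score_with_explanation(
    {"rating": 4.5, "distance_km": 3.0, "price_per_night": 120}
)
# Show the factors that moved the score most, largest effect first.
for name, value in sorted(parts.items(), key=lambda kv: abs(kv[1]), reverse=True):
    print(f"{name}: {value:+.2f}")
print(f"total: {total:.2f}")
```

Because each contribution is simply weight times feature value, the listed factors add up exactly to the total, which keeps the explanation faithful to the score.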

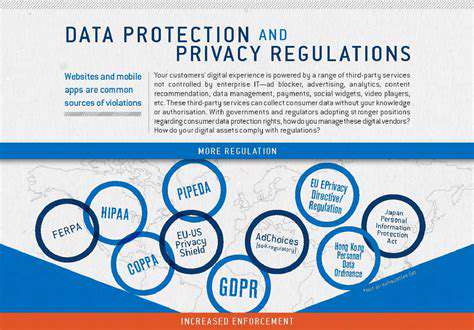

Ethical Implications for Data Collection and Use

The data used to train travel algorithms often comes from user interactions, reviews, and booking information. This data can contain sensitive personal information that may be vulnerable to misuse or misinterpretation. It's crucial to ensure that data collection and usage practices adhere to ethical guidelines and protect the privacy of travelers.

Ensuring data security and responsible data handling is essential. Privacy must be a priority so that personal information collected from travelers is not misused or exploited and this sensitive data is used ethically in travel algorithms.
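Two common responsible-handling practices are data minimization (keeping only the fields a model needs) and pseudonymization of identifiers. The sketch below applies both to a hypothetical booking record, using a keyed hash (HMAC-SHA256) from Python's standard library. The field names are assumptions. Pseudonymized data can still be re-identifiable, so this reduces privacy risk rather than removing it.

```python
import hashlib
import hmac
import secrets

# Illustration only: in practice the key would be stored separately from the
# data (e.g. in a secrets manager), not generated at import time.
PSEUDONYM_KEY = secrets.token_bytes(32)


def pseudonymize(user_id: str, key: bytes = PSEUDONYM_KEY) -> str:
    """Replace a user identifier with a keyed hash (HMAC-SHA256, hex-encoded)."""
    return hmac.new(key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def strip_record(record: dict) -> dict:
    """Keep only the fields a model needs, with the user ID pseudonymized."""
    return {
        "user": pseudonymize(record["user_id"]),
        "destination": record["destination"],
        "nights": record["nights"],
    }


booking = {"user_id": "traveler-123", "email": "a@example.com",
           "passport_no": "X1234567", "destination": "Lisbon", "nights": 4}
print(strip_record(booking))
```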
