Building Consumer Trust Through Data Transparency

Businesses are reorienting toward empirical validation across their operations. Where hunches and experience once dominated boardroom discussions, quantitative evidence now underpins strategic pivots. The shift is most visible in customer-facing functions, where marketing spend and product iterations undergo rigorous performance analysis before scaling.

Modern analytics platforms uncover relationships that manual review alone would miss. By applying computational pattern recognition to operational datasets, companies can identify small inefficiencies and emerging trends at their earliest detectable stages. This anticipatory capability allows for targeted adjustments that compound into significant competitive advantages, from personalized customer journeys to more precise inventory management. The resulting efficiency gains can be larger than even seasoned executives expect.

Resource allocation transforms from political negotiation to mathematical optimization under this model. Departments justify budgets through demonstrable ROI metrics rather than persuasive rhetoric, fostering organizational cultures where evidence consistently overrides opinion. This disciplined approach naturally surfaces best practices while systematically eliminating ineffective legacy processes.

Overcoming Challenges in Data Transformation

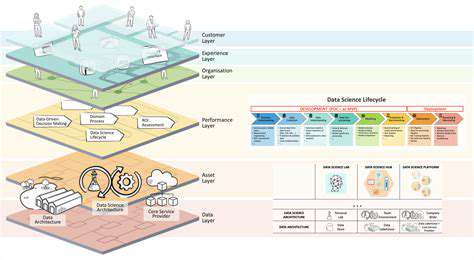

Transitioning to data-centric operations presents multifaceted obstacles that many organizations underestimate. Technical hurdles like system interoperability coexist with human resistance to abandoning familiar decision-making rituals. Perhaps most critically, ensuring information consistency across legacy and modern platforms frequently derails transformation timelines.

The talent gap is often the most persistent barrier. Data mastery requires hybrid professionals who combine technical skill with business acumen, and such people are rare. Forward-thinking companies address this through upskilling programs that turn existing employees into data-literate problem solvers. At the same time, they implement governance structures that maintain strict standards for information quality without stifling analytical creativity.

Successful adopters approach these challenges holistically, recognizing that technology alone cannot drive transformation. They pair cutting-edge tools with change management initiatives that make data fluency part of organizational DNA. Regular audits and iterative improvements ensure systems evolve alongside business needs rather than becoming obsolete.

Beyond the Basics: What Consumers Expect

Understanding the Data Landscape

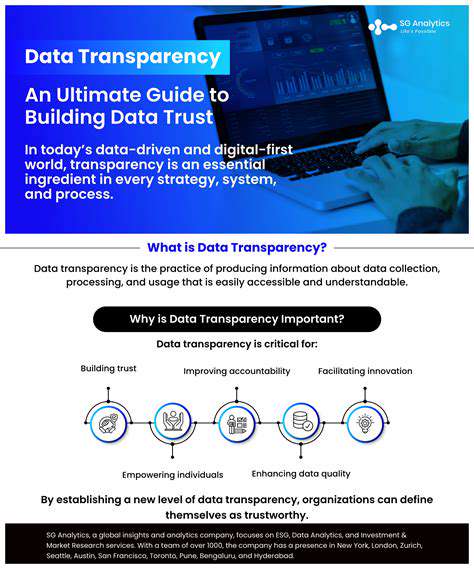

Today's consumers are more aware of their digital footprints than ever. This heightened awareness, combined with high-profile privacy scandals, is reshaping expectations around corporate data practices. Transparency has moved from nice-to-have to non-negotiable, with customers demanding clear explanations of collection methods, storage protocols, and usage boundaries.

The regulatory environment compounds this shift, with legislation like GDPR forcing businesses to formalize their data stewardship. Organizations that proactively exceed compliance requirements often discover unexpected benefits, from strengthened brand loyalty to improved data quality through consumer verification processes.

Data Security and Privacy Concerns

Cybersecurity incidents dominate headlines, making robust protection measures critical for customer acquisition and retention. Modern consumers expect enterprise-grade security for their personal information, with particular sensitivity around financial and biometric data. Companies that transparently communicate their security investments often gain measurable trust advantages.

Proactive security measures now serve as marketing differentiators rather than cost centers. Detailed breach response plans, regular third-party audits, and clear encryption standards all contribute to consumer confidence in an era where data vulnerability dominates public discourse.

Transparency and Communication

Legalese-filled privacy policies no longer satisfy increasingly sophisticated users. Consumers want intuitive explanations of what data gets collected and why, presented through multiple accessible channels. The most trusted organizations implement layered disclosure: concise summaries for casual users, complemented by detailed technical documentation for privacy-conscious individuals.

Interactive preference centers represent the next evolution in transparency, allowing real-time adjustments to data sharing permissions. This granular control transforms privacy from abstract concern to tangible user empowerment, often increasing willingness to share data for personalized experiences.
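
One way to picture the granular control described above is a per-purpose permission map with a change history. The following is a minimal sketch rather than a reference design: the `ConsentPreferences` class, the purpose names, and the deny-by-default rule are all illustrative assumptions.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class ConsentPreferences:
    """Hypothetical per-user record of data sharing permissions, one flag per purpose."""
    user_id: str
    permissions: dict[str, bool] = field(default_factory=dict)
    history: list[tuple[str, str, bool]] = field(default_factory=list)

    def set_permission(self, purpose: str, allowed: bool) -> None:
        # Apply the change immediately and keep a timestamped record of it.
        self.permissions[purpose] = allowed
        timestamp = datetime.now(timezone.utc).isoformat()
        self.history.append((timestamp, purpose, allowed))

    def allows(self, purpose: str) -> bool:
        # Assumption: any purpose the user has not explicitly enabled is denied.
        return self.permissions.get(purpose, False)


prefs = ConsentPreferences("user-123")
prefs.set_permission("personalized_recommendations", True)
prefs.set_permission("third_party_sharing", False)
```

Keeping the history alongside the current state means the same structure can answer both "what is allowed now" and "when did the user change their mind".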

The Impact of AI and Machine Learning

As algorithms influence more aspects of daily life, consumers grow wary of opaque decision-making systems. Organizations deploying AI must balance competitive advantage with ethical responsibility, particularly in sensitive domains like credit scoring or content moderation. Explainable AI frameworks that demystify automated decisions are becoming essential to meeting consumer expectations.

The Role of Data in Personalized Experiences

The personalization paradox challenges modern marketers: consumers demand relevant experiences while resisting perceived surveillance. Navigating it requires a clear value exchange in which data sharing translates directly into tangible benefits. The most effective programs make data collection feel collaborative rather than extractive.

Seasoned practitioners recommend starting with non-sensitive preference data before requesting more personal information. This graduated approach builds trust while demonstrating the concrete advantages of shared data through increasingly tailored interactions.

Building a Culture of Trust

Sustainable data relationships require embedding privacy considerations throughout organizational culture. From engineering teams to C-suites, every function must internalize data ethics as core to business success. The most trusted brands institutionalize privacy protection through mandatory training, ethical review boards, and employee incentive structures aligned with responsible data practices.

Continuous consumer feedback loops ensure these initiatives remain grounded in real user concerns rather than theoretical ideals. Organizations that consistently demonstrate responsiveness to privacy concerns often gain permission for more ambitious data initiatives over time.

Implementing Effective Data Transparency Strategies

Data Transformation Strategies

Strategic data transformation separates superficial analytics from truly impactful business intelligence. The most effective approaches treat data refinement as continuous improvement rather than a one-time project, with iterative cycles that progressively enhance information quality and utility. This philosophy recognizes that business needs evolve alongside analytical capabilities.

Sophisticated organizations implement metadata management systems that document transformation logic, creating audit trails that improve reproducibility and regulatory compliance. These systems become particularly valuable during personnel transitions, ensuring institutional knowledge persists beyond individual contributors.
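
As a rough illustration of documenting transformation logic, the sketch below wraps each transformation step so that every run appends an entry to an audit trail. It assumes records are plain dictionaries held in a list; the `audited` decorator, the `AUDIT_LOG` list, and the step shown are hypothetical names, not part of any particular metadata management product.

```python
import functools
from datetime import datetime, timezone

# Hypothetical in-memory audit trail; a real system would persist this.
AUDIT_LOG: list[dict] = []


def audited(step_name: str, description: str):
    """Record what each transformation step did every time it runs."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(rows):
            result = func(rows)
            AUDIT_LOG.append({
                "step": step_name,
                "description": description,
                "rows_in": len(rows),
                "rows_out": len(result),
                "ran_at": datetime.now(timezone.utc).isoformat(),
            })
            return result
        return wrapper
    return decorator


@audited("drop_missing_email", "Remove records with no email address")
def drop_missing_email(rows):
    return [row for row in rows if row.get("email")]


cleaned = drop_missing_email([{"email": "a@example.com"}, {"email": None}, {}])
```

Because the description lives next to the code that implements it, the documented logic is less likely to drift from what the pipeline actually does.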

Data Cleaning Techniques

Information hygiene forms the foundation of reliable analytics, yet many organizations underestimate its complexity. Modern data cleaning extends beyond null value handling to include temporal consistency checks, cross-field validation, and anomaly detection. Advanced practitioners implement automated data quality dashboards that surface issues before they corrupt downstream analysis.
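
The checks named above can be sketched on a single record. This example assumes a hypothetical order record with `order_date`, `ship_date`, `quantity`, `unit_price`, and `total` fields; the field names, issue labels, and one-cent tolerance are illustrative choices.

```python
from datetime import date

REQUIRED_FIELDS = ("order_date", "ship_date", "quantity", "unit_price", "total")


def find_issues(record: dict) -> list[str]:
    """Return a list of data quality issues found in one order record."""
    # Null value handling: report missing fields and stop, since the
    # remaining checks need every field to be present.
    issues = [f"missing:{name}" for name in REQUIRED_FIELDS if record.get(name) is None]
    if issues:
        return issues

    # Temporal consistency: an order cannot ship before it was placed.
    if record["ship_date"] < record["order_date"]:
        issues.append("ship_date_before_order_date")

    # Cross-field validation: the total should equal quantity times unit price.
    expected_total = round(record["quantity"] * record["unit_price"], 2)
    if abs(expected_total - record["total"]) > 0.01:
        issues.append("total_mismatch")

    return issues


good = {"order_date": date(2024, 3, 1), "ship_date": date(2024, 3, 3),
        "quantity": 2, "unit_price": 9.99, "total": 19.98}
bad = {"order_date": date(2024, 3, 10), "ship_date": date(2024, 3, 8),
       "quantity": 3, "unit_price": 5.0, "total": 20.0}
```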

Context-aware cleaning algorithms now outperform rigid rules-based approaches, particularly when handling messy real-world data. These intelligent systems learn expected value ranges and relationships from historical patterns, flagging deviations for human review while automatically correcting trivial errors.
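
A very simple stand-in for learning expected value ranges from history is the interquartile-range rule. The sketch below is not the context-aware approach itself, only a small example of deriving bounds from past data instead of hard-coding them; the function names and the multiplier `k` are assumptions.

```python
import statistics


def learn_range(history: list[float], k: float = 1.5) -> tuple[float, float]:
    """Derive an expected value range from historical data using IQR fences."""
    q1, _median, q3 = statistics.quantiles(history, n=4)
    iqr = q3 - q1
    return q1 - k * iqr, q3 + k * iqr


def flag_for_review(values: list[float], bounds: tuple[float, float]) -> list[float]:
    """Return the values that fall outside the learned range."""
    low, high = bounds
    return [v for v in values if not (low <= v <= high)]


history = [10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20]
bounds = learn_range(history)
suspicious = flag_for_review([15, 42, -7], bounds)
```

Values in `suspicious` would go to human review; nothing here corrects data automatically.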

Data Structuring Methods

The shift toward real-time analytics demands fundamentally different data architectures than traditional batch processing. Modern systems increasingly adopt schema-on-read approaches that accommodate diverse data types without restrictive upfront modeling. This flexibility proves invaluable when dealing with rapidly evolving business requirements and emerging data sources.
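
To make the schema-on-read idea concrete, the following sketch stores events as raw JSON lines and applies structure only when a particular question is asked. The event shapes, the `read_purchases` function, and the default currency are hypothetical.

```python
import json

# Raw events are stored as-is; different event types carry different fields.
RAW_EVENTS = [
    '{"user": "u1", "type": "click", "x": 10}',
    '{"user": "u2", "type": "purchase", "amount": "19.99", "currency": "EUR"}',
]


def read_purchases(raw_lines):
    """Apply a purchase schema at read time, ignoring events that do not fit it."""
    for line in raw_lines:
        event = json.loads(line)
        if event.get("type") != "purchase":
            continue
        yield {
            "user": event["user"],
            "amount": float(event["amount"]),          # cast on read
            "currency": event.get("currency", "USD"),  # assumed default
        }


purchases = list(read_purchases(RAW_EVENTS))
```

A new event type can be written to storage without changing any existing reader, which is the flexibility the paragraph above refers to.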

Graph-based representations gain popularity for complex relationship mapping, particularly in customer journey analysis and fraud detection. These structures naturally capture the interconnected nature of modern digital interactions while enabling powerful traversal-based queries that reveal hidden network effects.
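
A traversal-based query of the kind mentioned above can be sketched with a plain adjacency map and breadth-first search. The example assumes a toy fraud detection scenario in which accounts are linked through shared devices or cards; all identifiers are made up for illustration.

```python
from collections import deque

# Hypothetical links between accounts and the devices or cards they used.
EDGES = [
    ("acct_A", "device_1"),
    ("acct_B", "device_1"),
    ("acct_B", "card_9"),
    ("acct_C", "card_9"),
    ("acct_D", "device_2"),
]


def build_graph(edges):
    """Build an undirected adjacency map from (node, node) pairs."""
    graph = {}
    for a, b in edges:
        graph.setdefault(a, set()).add(b)
        graph.setdefault(b, set()).add(a)
    return graph


def connected_nodes(graph, start):
    """Breadth-first traversal returning every node reachable from start."""
    seen = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for neighbor in graph.get(node, ()):
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append(neighbor)
    return seen


graph = build_graph(EDGES)
linked_accounts = sorted(
    n for n in connected_nodes(graph, "acct_A") if n.startswith("acct_")
)
```

Here `acct_A` and `acct_C` never share anything directly, yet the traversal links them through `acct_B`, which a flat table lookup would not reveal as easily.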

Data Enrichment Strategies

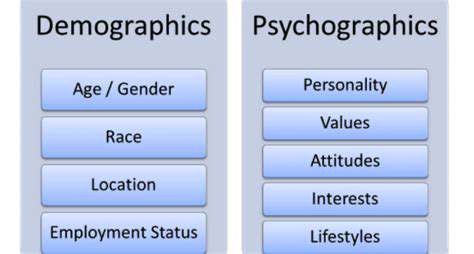

Strategic augmentation transforms basic records into multidimensional business assets. The most valuable enrichment goes beyond simple demographic appending to include behavioral predictions, propensity modeling, and network analysis. Forward-thinking organizations establish enrichment partnerships that provide mutually beneficial data exchanges rather than one-way supplementation.

Privacy-preserving techniques like differential privacy, which adds calibrated random noise to aggregate results so that no single individual's data noticeably changes the output, enable enrichment while limiting personal data exposure. These approaches will become increasingly important as regulatory scrutiny intensifies globally, allowing businesses to gain insights while protecting individuals.
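
As an illustration of the idea, the sketch below applies the Laplace mechanism to a counting query. It is a teaching example under stated assumptions, not a hardened implementation: the `private_count` function and its parameters are hypothetical, and production systems generally rely on vetted libraries because naive floating-point noise sampling has known weaknesses.

```python
import random


def private_count(records, predicate, epsilon, rng=random):
    """Return a noisy count of matching records using the Laplace mechanism."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for record in records if predicate(record))
    # Adding or removing one person changes a count by at most 1,
    # so the sensitivity is 1 and the noise scale is 1 / epsilon.
    scale = 1.0 / epsilon
    # The difference of two exponential draws with mean `scale`
    # follows a Laplace distribution centered on 0 with that scale.
    noise = rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)
    return true_count + noise


records = [{"opted_in": True}] * 30 + [{"opted_in": False}] * 70
noisy = private_count(records, lambda r: r["opted_in"], epsilon=0.5)
```

A smaller `epsilon` adds more noise and gives stronger privacy; a larger one gives more accurate answers with weaker protection.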

Data Validation and Verification

Modern validation frameworks incorporate both automated rule checks and human domain expertise. Statistical process control methods adapted from manufacturing help identify when data pipelines deviate from expected patterns. The most robust systems implement validation at multiple pipeline stages rather than just final outputs.
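
The statistical process control idea can be sketched with classic control limits: compute the mean and standard deviation of a pipeline metric over a baseline period, then flag later runs that fall more than three standard deviations away. The metric (daily row counts) and the function names are illustrative assumptions.

```python
import statistics


def control_limits(baseline, sigmas=3.0):
    """Compute lower and upper control limits from baseline observations."""
    mean = statistics.fmean(baseline)
    spread = statistics.stdev(baseline)
    return mean - sigmas * spread, mean + sigmas * spread


def out_of_control(values, limits):
    """Return (index, value) pairs for observations outside the control limits."""
    low, high = limits
    return [(i, v) for i, v in enumerate(values) if v < low or v > high]


# Hypothetical daily row counts from a period when the pipeline was healthy.
baseline_row_counts = [1000, 1020, 980, 1010, 990, 1005, 995]
limits = control_limits(baseline_row_counts)

# New runs to check against the limits; the second one lost many rows.
alerts = out_of_control([1002, 640, 1015], limits)
```

The same check can run after each pipeline stage, which matches the point above about validating at multiple stages instead of only on final outputs.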

Performance Considerations

Cloud-native transformation tools now enable processing at previously unimaginable scales, but architectural choices significantly impact cost efficiency. Smart organizations implement progressive scaling strategies that match computational resources to current needs while maintaining expansion capacity. Serverless architectures prove particularly effective for variable workloads.

In-memory processing and columnar storage formats continue to raise performance, enabling complex analytics on commodity hardware. These technologies make advanced data capabilities available without the specialized infrastructure investments they once required.

Error Handling and Management

Resilient data systems are designed for failure rather than perfection. Modern orchestration tools automatically retry failed operations with exponential backoff while maintaining comprehensive audit trails. Sophisticated monitoring provides real-time pipeline visibility, allowing teams to intervene before issues cascade.
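
Retrying with exponential backoff while keeping an audit trail can be sketched in a few lines. This is a minimal illustration, not how any particular orchestration tool implements it: `retry_with_backoff`, its parameters, and the `flaky_load` operation are hypothetical, and real systems typically add random jitter to the delays.

```python
import time


def retry_with_backoff(operation, audit_log, max_attempts=4, base_delay=0.5,
                       sleep=time.sleep):
    """Call operation until it succeeds, doubling the wait after each failure."""
    for attempt in range(1, max_attempts + 1):
        try:
            result = operation()
        except Exception as exc:
            audit_log.append({"attempt": attempt, "status": "failed", "error": repr(exc)})
            if attempt == max_attempts:
                raise  # give up and surface the last error
            sleep(base_delay * 2 ** (attempt - 1))  # 0.5s, 1s, 2s, ...
        else:
            audit_log.append({"attempt": attempt, "status": "ok"})
            return result


# Demo: an operation that fails twice before succeeding. The delays are
# recorded in a list instead of actually sleeping, to keep the demo fast.
calls = {"count": 0}


def flaky_load():
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("temporary failure")
    return "loaded"


audit_log, delays = [], []
outcome = retry_with_backoff(flaky_load, audit_log, sleep=delays.append)
```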

Error classification systems help prioritize resolution efforts by impact severity, while root cause analysis processes prevent recurrence of systemic issues. These practices transform errors from operational frustrations into continuous improvement opportunities.
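
A severity-based classification might look like the sketch below. The severity levels, the classification rules, and the error fields (`blocks_downstream`, `rows_affected`) are assumptions chosen for illustration; an actual scheme would reflect the organization's own impact criteria.

```python
from enum import IntEnum


class Severity(IntEnum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


def classify(error: dict) -> Severity:
    """Assign a severity to an error based on its impact (hypothetical rules)."""
    if error.get("blocks_downstream"):
        return Severity.HIGH
    if error.get("rows_affected", 0) > 100:
        return Severity.MEDIUM
    return Severity.LOW


def triage(errors: list[dict]) -> list[dict]:
    """Order errors so that the most severe are resolved first."""
    return sorted(errors, key=classify, reverse=True)


errors = [
    {"name": "late_file", "rows_affected": 0},
    {"name": "bad_currency_codes", "rows_affected": 2500},
    {"name": "schema_change", "blocks_downstream": True},
]
queue = [error["name"] for error in triage(errors)]
```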