Ethical Considerations in AI Marketing

Understanding Bias Amplification

Bias amplification is a growing concern because it magnifies and reinforces prejudices already present in data, algorithms, or human interactions. Amplified biases can perpetuate societal inequalities and strengthen harmful stereotypes, often operating unnoticed while shaping perceptions and influencing outcomes. Recognizing how this process works is essential for creating fairer systems and reducing its negative effects.

One critical element of bias amplification lies in its subtle influence on decision-making, frequently occurring without individuals being aware of the underlying prejudices. This hidden nature makes it particularly difficult to combat, demanding proactive strategies to detect and address biases at every stage of system development and implementation.

Examples of Bias Amplification

A well-known instance of bias amplification appears in online echo chambers, where users primarily encounter content that aligns with their existing beliefs. This reinforcement of biases narrows exposure to diverse viewpoints, fostering polarization and limiting mutual understanding between groups. The algorithms powering these platforms significantly contribute to this issue, highlighting the need to evaluate their role in perpetuating societal prejudices.

Another example emerges in hiring practices. Recruitment algorithms trained on historical data reflecting gender or racial biases may perpetuate these biases in their recommendations, leading to reduced workplace diversity and fewer opportunities for qualified candidates. Tackling these biases is vital for cultivating a more inclusive and equitable professional landscape.

Mitigating the Impact of Bias Amplification

A fundamental step in addressing bias amplification involves developing more diverse and representative datasets. Incorporating a broader range of data helps identify and correct biases within algorithms and models, reducing the risk that systems unintentionally reinforce societal inequalities. Integrating varied perspectives fosters a fairer and more balanced environment.
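One way to make representativeness concrete is to compare each group's share of a dataset with a target share and derive reweighting factors. The following is a minimal Python sketch, not a prescribed method: the group labels, the sample, and the 50/50 target shares are all hypothetical values chosen for illustration.

```python
from collections import Counter

def representation_weights(groups, reference_shares):
    """Return per-group weights that rebalance a sample toward target shares.

    groups: one group label per record (hypothetical data).
    reference_shares: dict of group label -> target share, summing to 1.
    """
    counts = Counter(groups)
    total = len(groups)
    weights = {}
    for group, target in reference_shares.items():
        observed = counts.get(group, 0) / total
        # A group absent from the sample cannot be reweighted; flag it with None.
        weights[group] = target / observed if observed > 0 else None
    return weights

# Hypothetical sample in which group "a" is heavily over-represented.
sample = ["a"] * 80 + ["b"] * 20
weights = representation_weights(sample, {"a": 0.5, "b": 0.5})
```

Weights above 1 mark under-represented groups and weights below 1 mark over-represented ones. A `None` weight signals that more data must be collected, since reweighting cannot create records that do not exist.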

Additionally, recognizing the potential for bias amplification in various systems—whether in algorithms, datasets, or human decisions—is crucial. By understanding these sources and implementing corrective measures, we can build more equitable and inclusive frameworks.

The Role of Awareness and Education

Raising awareness about bias amplification is key to combating its effects. Educating individuals on how biases are amplified, and on the consequences that follow, empowers them to critically assess information and recognize prejudices in their own thinking and actions. Promoting awareness and education lays the foundation for a society more resilient to bias amplification.

Enhancing critical thinking and media literacy skills also equips individuals to evaluate information more effectively, enabling them to challenge biased content and develop a more nuanced worldview. This fosters a more informed and engaged public.

Privacy Concerns: Protecting Consumer Data

Protecting Consumer Data in the Digital Age

In today's digital landscape, consumers share vast amounts of personal data online, from browsing behavior to financial details. Safeguarding this sensitive information is essential for maintaining confidence in online platforms, and it requires collaboration among businesses, governments, and individuals.

While the digital age offers unparalleled convenience, it also introduces new risks. Data breaches and unauthorized access can severely impact individuals, compromising financial stability, personal reputation, and overall well-being. Prioritizing data security measures is critical to mitigating these threats.

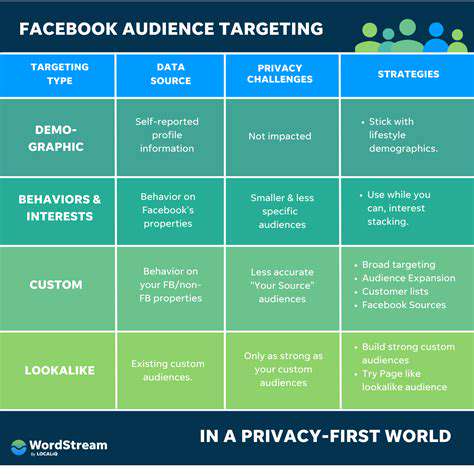

Data Collection Practices and Transparency

Transparency in data collection is crucial. Businesses must clearly outline how they gather, use, and store consumer information, so that individuals understand what data is collected, why it is collected, and with whom it may be shared. Providing options to opt out or modify personal data enhances consumer control.
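As a rough illustration of how opt-out choices might be honored in practice, the Python sketch below stores the purposes each consumer has agreed to and filters records before they are used. The `ConsumerRecord` type, the purpose names, and the helper functions are hypothetical and not drawn from any particular platform.

```python
from dataclasses import dataclass, field

@dataclass
class ConsumerRecord:
    user_id: str
    email: str
    # Purposes the consumer has agreed to, e.g. {"analytics", "marketing"}.
    consented_purposes: set = field(default_factory=set)

def records_for_purpose(records, purpose):
    """Keep only records whose owner consented to the given purpose."""
    return [r for r in records if purpose in r.consented_purposes]

def opt_out(record, purpose):
    """Withdraw consent for one purpose; a no-op if it was never granted."""
    record.consented_purposes.discard(purpose)

# Hypothetical records for illustration only.
records = [
    ConsumerRecord("u1", "u1@example.com", {"analytics", "marketing"}),
    ConsumerRecord("u2", "u2@example.com", {"analytics"}),
]
opt_out(records[0], "marketing")
marketing_audience = records_for_purpose(records, "marketing")
```

Checking consent at the point of use, rather than only at collection time, means a later opt-out takes effect without a separate cleanup step.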

The Role of Regulations and Compliance

Regulations such as the European Union's General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA) establish essential protections for consumer data, granting individuals greater control over their information. These frameworks set critical standards for responsible data handling, but they are only as effective as their enforcement.

Cybersecurity Measures for Data Protection

Implementing strong cybersecurity protocols—such as encryption, multi-factor authentication, and regular audits—is essential to prevent unauthorized access and breaches. Data breaches carry significant financial and reputational risks for businesses. Investing in robust security measures is a necessity.
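To illustrate the multi-factor authentication item, here is a sketch of time-based one-time passwords (TOTP, as specified in RFC 6238) using only the Python standard library. It is meant to show the mechanism, not to serve as production code: the secret below is the published RFC test key, the function names are illustrative, and a real deployment would normally rely on a vetted library and securely stored per-user secrets.

```python
import hashlib
import hmac
import struct

def totp(secret: bytes, unix_time: int, step: int = 30, digits: int = 6) -> str:
    """Compute a time-based one-time password (RFC 6238, HMAC-SHA1)."""
    counter = unix_time // step
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    # Dynamic truncation (RFC 4226): the low nibble of the last byte picks an offset.
    offset = digest[-1] & 0x0F
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)

def verify_totp(secret: bytes, submitted: str, unix_time: int) -> bool:
    """Compare in constant time to avoid leaking digits through timing."""
    return hmac.compare_digest(totp(secret, unix_time), submitted)

# Demo only: this is the RFC 6238 test key, never a real secret.
DEMO_SECRET = b"12345678901234567890"
code_now = totp(DEMO_SECRET, unix_time=59)
is_valid = verify_totp(DEMO_SECRET, code_now, unix_time=59)
```

Because the code depends on both a shared secret and the current time window, a stolen password alone is not enough to sign in.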

Consumer Empowerment and Rights

Consumers play a pivotal role in protecting their data. Being mindful of what they share and choosing privacy-conscious services are key steps, and reading privacy policies before using online platforms enables informed decisions. Empowering consumers to hold businesses accountable fosters a more transparent data environment, in which individuals know how their data is used and what actions to take if it is compromised.

Responsible AI Implementation: A Call to Action

Defining Responsible AI

Responsible AI extends beyond technical development, requiring a holistic approach that considers societal impacts throughout the AI lifecycle—from creation to deployment and ongoing oversight. This includes addressing biases, ensuring transparency, and maintaining public trust.

Addressing Bias and Fairness

Because AI systems learn from data, biases present in training datasets can lead to discriminatory outcomes in areas like hiring, lending, and criminal justice. Mitigating these biases demands careful data selection and preprocessing to promote fairness and equity.

Developing methods to detect and reduce bias—such as adversarial debiasing and fairness-aware algorithms—is essential for creating AI systems that treat all individuals impartially.
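Before applying any debiasing method, it helps to measure whether outcomes differ across groups. The Python sketch below computes per-group selection rates and their ratio, sometimes called the disparate impact ratio. It is a simple detection metric, not adversarial debiasing or a fairness-aware training algorithm, and the outcomes and group labels are hypothetical.

```python
def selection_rates(outcomes, groups):
    """Share of positive outcomes (1) per group."""
    totals, positives = {}, {}
    for outcome, group in zip(outcomes, groups):
        totals[group] = totals.get(group, 0) + 1
        positives[group] = positives.get(group, 0) + outcome
    return {g: positives[g] / totals[g] for g in totals}

def disparate_impact_ratio(rates):
    """Lowest selection rate divided by the highest; 1.0 means parity."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0

# Hypothetical model decisions (1 = selected) for two groups.
outcomes = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
rates = selection_rates(outcomes, groups)
ratio = disparate_impact_ratio(rates)
```

A common rule of thumb, the "four-fifths rule" from US employment guidelines, treats a ratio below 0.8 as a signal worth investigating. A low ratio does not prove discrimination on its own, but it indicates where closer review is needed.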

Ensuring Transparency and Explainability

Transparent AI decision-making builds trust, particularly in high-stakes fields like healthcare and finance. Explainable AI (XAI) techniques enable users to comprehend how AI reaches conclusions, fostering accountability.
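For a simple linear scoring model, one basic form of explanation is to report how much each input contributed to the final score. The Python sketch below assumes a hypothetical credit-style model. The feature names, weights, and applicant values are invented for illustration, and more complex models generally require dedicated XAI tooling.

```python
def explain_linear_score(weights, features, bias=0.0):
    """Return the score and per-feature contributions, largest magnitude first."""
    contributions = {name: weights[name] * value for name, value in features.items()}
    score = bias + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return score, ranked

# Hypothetical model weights and applicant features.
weights = {"income": 0.5, "debt": -1.0, "tenure_years": 0.25}
applicant = {"income": 4.0, "debt": 1.5, "tenure_years": 2.0}
score, ranked = explain_linear_score(weights, applicant, bias=0.5)
```

Listing contributions by magnitude lets a reviewer see which inputs pushed the decision up or down, which is the kind of accountability explainability aims to support.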

Promoting Human Oversight and Control

While AI offers transformative potential, human oversight remains critical, especially in applications where errors could have severe consequences. Designing AI systems with clear accountability and human intervention capabilities ensures responsible use.
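One common way to build in human intervention is to automate only the decisions the model is confident about and send the rest to a person. The Python sketch below shows this routing idea. The thresholds and label names are hypothetical and would need to be set according to the risk of the application.

```python
def route_decision(score, approve_at=0.9, reject_at=0.1):
    """Route a model score in [0, 1] to automation or to a human reviewer."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    if score >= approve_at:
        return "auto_approve"
    if score <= reject_at:
        return "auto_reject"
    # Uncertain cases are escalated instead of being decided automatically.
    return "human_review"

# Hypothetical model scores for three cases.
decisions = [route_decision(s) for s in (0.95, 0.50, 0.05)]
```

Widening the gap between the two thresholds sends more cases to reviewers, trading throughput for oversight in higher-stakes settings.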

Fostering Collaboration and Education

Responsible AI requires collaboration across researchers, developers, policymakers, and the public. Open dialogue, education, and shared knowledge are key to ethical AI development and deployment.

Establishing Ethical Frameworks and Standards

As AI advances, robust ethical frameworks must address privacy, bias, accountability, and transparency. International cooperation is necessary to standardize responsible AI practices across industries and regions, ensuring the technology benefits society as a whole.
