AI-Driven Personalization for Authentic Encounters

Understanding the Human Element

Beyond the complex algorithms and intricate code lies the crucial human element in building truly effective and impactful AI systems. Developing these systems requires a deep understanding of human needs, motivations, and biases. This understanding is essential to ensure that the AI is not only technically proficient but also ethically sound and aligned with human values.

Focusing solely on the technical aspects while ignoring societal impact invites harm. We must account for the potential for misuse, bias amplification, and unintended consequences of these powerful tools.

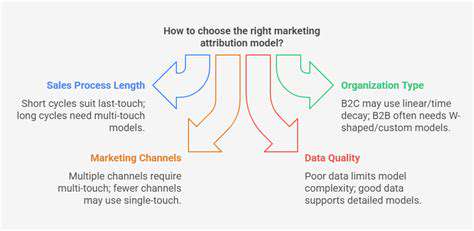

Data Quality and Integrity

Robust and reliable data is the lifeblood of any successful AI project. The quality and integrity of the data used to train and evaluate AI models directly affect the accuracy, reliability, and fairness of the system's output. The old adage "garbage in, garbage out" is particularly relevant to AI.

Data collection methods must be meticulously planned so that the data is accurate, complete, and ethically gathered. Data cleaning, preprocessing, and validation steps are essential to mitigate biases and errors that could degrade the AI's performance.
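
As a rough illustration of the validation step, the sketch below counts three common quality problems in a batch of records: incomplete rows, exact duplicates, and out-of-range values. The function name `validate_records`, the `ValidationReport` type, and the sample fields (`age`, `country`) are hypothetical and chosen only for this example; a real pipeline would likely need many more checks.

```python
from dataclasses import dataclass


@dataclass
class ValidationReport:
    total: int
    missing: int
    duplicates: int
    out_of_range: int


def validate_records(records, required_fields, ranges):
    """Count basic quality problems in a list of dict records.

    `ranges` maps a field name to an inclusive (low, high) pair.
    All names here are illustrative assumptions, not a standard API.
    """
    seen = set()
    missing = duplicates = out_of_range = 0
    for rec in records:
        # A record is incomplete if any required field is absent or None.
        if any(rec.get(field) is None for field in required_fields):
            missing += 1
        # Exact duplicates share the same sorted (field, value) signature.
        key = tuple(sorted((k, repr(v)) for k, v in rec.items()))
        if key in seen:
            duplicates += 1
        seen.add(key)
        # Flag the record once if any present value falls outside its range.
        for field, (low, high) in ranges.items():
            value = rec.get(field)
            if value is not None and not (low <= value <= high):
                out_of_range += 1
                break
    return ValidationReport(len(records), missing, duplicates, out_of_range)


sample = [
    {"age": 34, "country": "FR"},
    {"age": None, "country": "DE"},   # incomplete
    {"age": 34, "country": "FR"},     # duplicate of the first record
    {"age": 212, "country": "US"},    # implausible age
]
report = validate_records(sample, ["age", "country"], {"age": (0, 120)})
print(report)
```

Counting problems rather than silently dropping rows keeps the decision about what to discard visible to whoever reviews the data.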

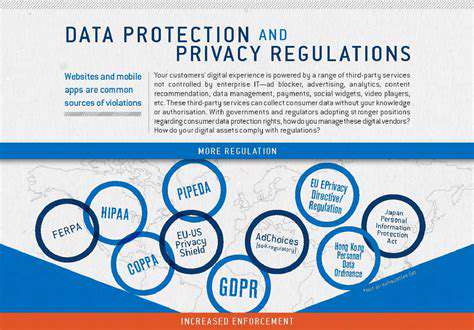

Ethical Considerations and Bias Mitigation

Ethical considerations are paramount in the development and deployment of AI systems. We need to address the potential for bias in algorithms and data, which can perpetuate and even exacerbate existing societal inequalities. Weighing these implications before and during deployment is critical to responsible AI use.

Transparency and explainability in AI decision-making processes are crucial for building trust and accountability. Developing methods to identify and mitigate biases in algorithms and data sets is an ongoing challenge requiring careful thought and collaboration between researchers, developers, and policymakers.
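
One simple way to make "identifying bias" concrete is to compare how often a model produces a positive outcome for each group. The sketch below computes that gap, often called the demographic parity difference. It is only one of several fairness measures and says nothing about why a gap exists; the function names and the toy data are assumptions made for illustration.

```python
from collections import defaultdict


def positive_rate_by_group(predictions, groups):
    """Return the share of positive (1) predictions for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        if pred == 1:
            positives[group] += 1
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_gap(predictions, groups):
    """Difference between the highest and lowest group positive rates."""
    rates = positive_rate_by_group(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Toy data: 1 means the system recommended the item, 0 means it did not.
preds = [1, 1, 0, 1, 0, 0, 0, 1]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

rates = positive_rate_by_group(preds, groups)
gap = demographic_parity_gap(preds, groups)
print(rates, gap)
```

A gap near zero means the groups receive positive outcomes at similar rates; what counts as an acceptable gap is a policy question, not something the code can decide.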

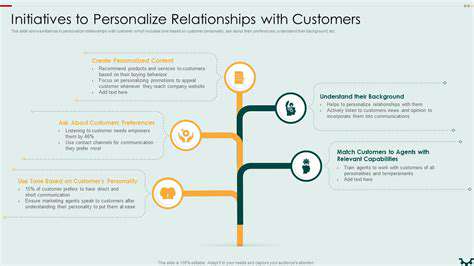

User Experience and Accessibility

The user experience (UX) of AI systems is critical for successful adoption and integration into various aspects of daily life. Intuitive interfaces and clear instructions are essential for users to interact effectively with the system and understand its capabilities. Furthermore, the system must be accessible to diverse users with varying levels of technical expertise and access needs. This includes considering users with disabilities and ensuring the system is usable by people from all backgrounds.

Collaboration and Interdisciplinary Approaches

Building truly impactful AI systems requires collaborative efforts across various disciplines. This includes bringing together experts in computer science, engineering, social sciences, ethics, and human-computer interaction. This interdisciplinary collaboration ensures a holistic approach that considers the broader societal implications of AI development and deployment.

Effective communication and knowledge sharing among these diverse groups are crucial to navigating the complexities of AI development and ensuring responsible innovation.

Continuous Monitoring and Evaluation

AI systems should not be treated as static entities. Continuous monitoring and evaluation are essential to ensure they remain aligned with their intended goals. This involves tracking performance metrics, identifying potential issues, and adapting to changing circumstances.

Regular audits and feedback mechanisms help ensure the system remains fair, accurate, and aligned with ethical principles. Proactive measures are needed to address biases, errors, and unintended consequences as the system evolves.
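
Tracking a performance metric over time can be sketched with a rolling window: keep the most recent outcomes, compute accuracy over them, and flag the system for review when accuracy drops below a threshold. The class name `RollingAccuracyMonitor`, the window size, and the threshold are all hypothetical values picked for this example.

```python
from collections import deque


class RollingAccuracyMonitor:
    """Track accuracy over the most recent `window_size` predictions."""

    def __init__(self, window_size=100, threshold=0.9):
        # deque with maxlen discards the oldest outcome automatically.
        self.outcomes = deque(maxlen=window_size)
        self.threshold = threshold

    def record(self, prediction, actual):
        # Store True when the prediction matched the observed outcome.
        self.outcomes.append(prediction == actual)

    def accuracy(self):
        # No data yet means accuracy is undefined.
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def needs_review(self):
        acc = self.accuracy()
        return acc is not None and acc < self.threshold


monitor = RollingAccuracyMonitor(window_size=4, threshold=0.75)
for prediction, actual in [(1, 1), (1, 1), (0, 1), (0, 1)]:
    monitor.record(prediction, actual)
degraded = monitor.needs_review()   # two of the last four were wrong

for prediction, actual in [(1, 1), (0, 0), (1, 1), (0, 0)]:
    monitor.record(prediction, actual)
recovered = not monitor.needs_review()  # window now holds four correct outcomes
print(degraded, recovered)
```

A fixed window reacts quickly to recent changes but forgets older behavior, so the window size is a trade-off between sensitivity and noise.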
